This iPython notebook is an implementation of a popular paper (Gatys et al., 2015) that demonstrates how to use neural networks to transfer artistic style from one image onto another.

The implementation is slightly modified to use Densenet instead of VGG-Net and an additional regularization term is also added to the overall loss function.

The main objective of the algorithm is to merge two images, namely content image (C) and style image (S) to create a generated image (G) , which combines the content of the image C with the style of the image S.

For example, we can see the stylized image from the Taj Mahal (content image C), mixed with a painting by Van Gogh (style image S).

The algorithm uses neural representations which are obtained by using Convolution Neural Networks (CNN), which are most powerful in image processing tasks. A CNN consists of a number of convolutional and subsampling layers optionally followed by fully connected layers, where each layer can be understood as a collection of image filters, each of which extracts a certain feature from the input image also called as feature maps.

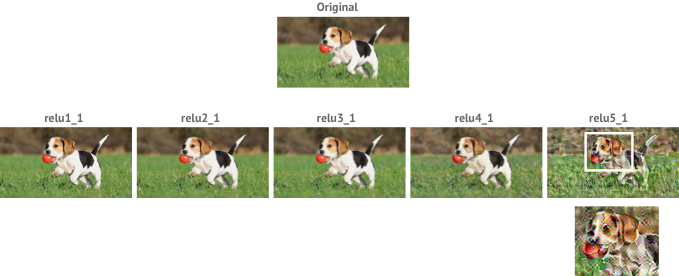

On training CNN for object recognition, along the processing hierarchy of the network, the feature maps represents more actual content of the image compared to its detailed pixel values. This can be visualized by reconstructing the image only from feature maps. This is called content reconstruction.

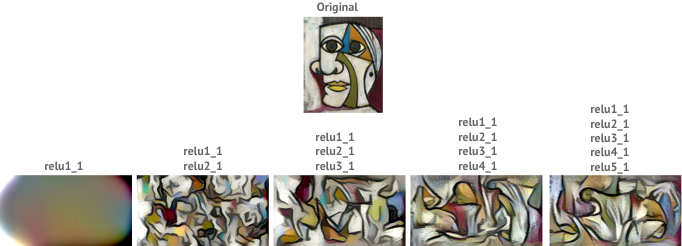

By including the feature correlations of multiple layers, we obtain a style representation of the input image, which captures its texture information but not the global arrangement. This representation captures general appearance in terms of colour and localised structures. This can be visualized by reconstructiing the image from feature maps showing texture information. This is called style reconstruction.